[2] Ceph Nautilus – cluster configuration #2

6 July 2020

We will now install and configure the distributed file system Ceph to set up a storage cluster.

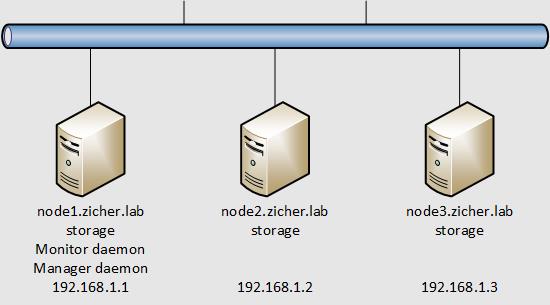

In this example we configure a Ceph cluster with 3 nodes. A free block device on each node (here [/dev/sdb]) is used for storage.

The network layout is as follows.

[1] First configure the [Monitor Daemon] and the [Manager Daemon].
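The OSD loop in step [2] copies `/etc/ceph/ceph.conf`, `/etc/ceph/ceph.client.admin.keyring` and `/var/lib/ceph/bootstrap-osd/ceph.keyring` from node1, so these files must already exist once the Monitor and Manager are set up. The following is a minimal pre-flight sketch that assumes bash on node1. The function name `check_osd_prereqs` and its optional root-prefix argument (useful only for trying it outside a real node) are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical pre-flight check: verify that the files copied by the OSD loop
# exist (and are non-empty) on the admin node before any disk is touched.
# $1 (optional): root prefix; defaults to "" (the real filesystem).
check_osd_prereqs() {
    local root="${1:-}"
    local missing=0
    local f
    for f in /etc/ceph/ceph.conf \
             /etc/ceph/ceph.client.admin.keyring \
             /var/lib/ceph/bootstrap-osd/ceph.keyring; do
        if [ ! -s "${root}${f}" ]; then
            echo "missing or empty: ${root}${f}" >&2
            missing=1
        fi
    done
    return "$missing"
}
```

Run `check_osd_prereqs` with no argument on node1. A non-zero exit status means step [1] is not finished yet.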

[2] From the [Admin Node], configure an OSD (Object Storage Device) on each node.

The block device ([/dev/sdb] in this example) is formatted for use by the OSD. Be careful: all data on it will be erased.
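The OSD loop wipes `/dev/sdb` on every node, so it can help to print the commands before running them. The sketch below reproduces that loop behind a hypothetical `DRY_RUN` switch. The default is `1`, which only prints the commands. The function names `run` and `plan_osds` and the variables `ADMIN_NODE`, `NODES` and `DEVICE` are illustrative assumptions that mirror this example's setup; they are not part of Ceph.

```bash
#!/usr/bin/env bash
# Hypothetical dry-run wrapper around the OSD loop: with DRY_RUN=1 (default)
# every command is only printed; with DRY_RUN=0 it is actually executed.
DRY_RUN="${DRY_RUN:-1}"
ADMIN_NODE="node1"          # assumption: admin node of this example
NODES="node1 node2 node3"   # the three nodes used in this example
DEVICE="/dev/sdb"           # WARNING: all data on this device is destroyed

run() {
    if [ "$DRY_RUN" = "1" ]; then
        printf '[dry-run] %s\n' "$*"
    else
        "$@"
    fi
}

plan_osds() {
    local node remote
    for node in $NODES; do
        # the admin node already has the config and keyrings
        if [ "$node" != "$ADMIN_NODE" ]; then
            run scp /etc/ceph/ceph.conf "${node}:/etc/ceph/ceph.conf"
            run scp /etc/ceph/ceph.client.admin.keyring "${node}:/etc/ceph"
            run scp /var/lib/ceph/bootstrap-osd/ceph.keyring "${node}:/var/lib/ceph/bootstrap-osd"
        fi
        # build the remote command; globs are expanded on the remote side
        remote="chown ceph:ceph /etc/ceph/ceph.* /var/lib/ceph/bootstrap-osd/*"
        remote+="; parted --script ${DEVICE} 'mklabel gpt'"
        remote+="; parted --script ${DEVICE} 'mkpart primary 0% 100%'"
        remote+="; ceph-volume lvm create --data ${DEVICE}1"
        run ssh "$node" "$remote"
    done
}
```

After reviewing the printed commands, `DRY_RUN=0 plan_osds` executes them.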

```
# if Firewalld is running, allow the Ceph service on every node
[root@node1 ~]# for NODE in node1 node2 node3
do
    ssh $NODE "firewall-cmd --add-service=ceph --permanent; firewall-cmd --reload"
done
success
success
success
success
success
success
# configure the OSD on every node
[root@node1 ~]# for NODE in node1 node2 node3
do
    if [ "${NODE}" != "node1" ]
    then
        scp /etc/ceph/ceph.conf ${NODE}:/etc/ceph/ceph.conf
        scp /etc/ceph/ceph.client.admin.keyring ${NODE}:/etc/ceph
        scp /var/lib/ceph/bootstrap-osd/ceph.keyring ${NODE}:/var/lib/ceph/bootstrap-osd
    fi
    ssh $NODE \
    "chown ceph:ceph /etc/ceph/ceph.* /var/lib/ceph/bootstrap-osd/*; \
    parted --script /dev/sdb 'mklabel gpt'; \
    parted --script /dev/sdb 'mkpart primary 0% 100%'; \
    ceph-volume lvm create --data /dev/sdb1"
done
Running command: /usr/bin/ceph-authtool --gen-print-key
Running command: /usr/bin/ceph --cluster ceph --name client.bootstrap-osd --keyring /var/lib/ceph/bootstrap-osd/ceph.keyring -i - osd new 2477fabd-4ab9-40ee-9e64-44346e1ec9ef
Running command: /usr/sbin/vgcreate --force --yes ceph-68733a26-10c9-44c5-be48-0132c29daefd /dev/sdb1
 stdout: Physical volume "/dev/sdb1" successfully created.
 stdout: Volume group "ceph-68733a26-10c9-44c5-be48-0132c29daefd" successfully created
Running command: /usr/sbin/lvcreate --yes -l 100%FREE -n osd-block-2477fabd-4ab9-40ee-9e64-44346e1ec9ef ceph-68733a26-10c9-44c5-be48-0132c29daefd
 stdout: Logical volume "osd-block-2477fabd-4ab9-40ee-9e64-44346e1ec9ef" created.
Running command: /usr/bin/ceph-authtool --gen-print-key
Running command: /usr/bin/mount -t tmpfs tmpfs /var/lib/ceph/osd/ceph-0
Running command: /usr/sbin/restorecon /var/lib/ceph/osd/ceph-0
Running command: /usr/bin/chown -h ceph:ceph /dev/ceph-68733a26-10c9-44c5-be48-0132c29daefd/osd-block-2477fabd-4ab9-40ee-9e64-44346e1ec9ef
Running command: /usr/bin/chown -R ceph:ceph /dev/dm-2
Running command: /usr/bin/ln -s /dev/ceph-68733a26-10c9-44c5-be48-0132c29daefd/osd-block-2477fabd-4ab9-40ee-9e64-44346e1ec9ef /var/lib/ceph/osd/ceph-0/block
Running command: /usr/bin/ceph --cluster ceph --name client.bootstrap-osd --keyring /var/lib/ceph/bootstrap-osd/ceph.keyring mon getmap -o /var/lib/ceph/osd/ceph-0/activate.monmap
 stderr: got monmap epoch 2
Running command: /usr/bin/ceph-authtool /var/lib/ceph/osd/ceph-0/keyring --create-keyring --name osd.0 --add-key AQAIaANfTN3hIRAAuWI6z1hpzbmcetubnjxXgA==
 stdout: creating /var/lib/ceph/osd/ceph-0/keyring
added entity osd.0 auth(key=AQAIaANfTN3hIRAAuWI6z1hpzbmcetubnjxXgA==)
Running command: /usr/bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-0/keyring
Running command: /usr/bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-0/
Running command: /usr/bin/ceph-osd --cluster ceph --osd-objectstore bluestore --mkfs -i 0 --monmap /var/lib/ceph/osd/ceph-0/activate.monmap --keyfile - --osd-data /var/lib/ceph/osd/ceph-0/ --osd-uuid 2477fabd-4ab9-40ee-9e64-44346e1ec9ef --setuser ceph --setgroup ceph
--> ceph-volume lvm prepare successful for: /dev/sdb1
Running command: /usr/bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-0
Running command: /usr/bin/ceph-bluestore-tool --cluster=ceph prime-osd-dir --dev /dev/ceph-68733a26-10c9-44c5-be48-0132c29daefd/osd-block-2477fabd-4ab9-40ee-9e64-44346e1ec9ef --path /var/lib/ceph/osd/ceph-0 --no-mon-config
Running command: /usr/bin/ln -snf /dev/ceph-68733a26-10c9-44c5-be48-0132c29daefd/osd-block-2477fabd-4ab9-40ee-9e64-44346e1ec9ef /var/lib/ceph/osd/ceph-0/block
Running command: /usr/bin/chown -h ceph:ceph /var/lib/ceph/osd/ceph-0/block
Running command: /usr/bin/chown -R ceph:ceph /dev/dm-2
Running command: /usr/bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-0
Running command: /usr/bin/systemctl enable ceph-volume@lvm-0-2477fabd-4ab9-40ee-9e64-44346e1ec9ef
 stderr: Created symlink /etc/systemd/system/multi-user.target.wants/ceph-volume@lvm-0-2477fabd-4ab9-40ee-9e64-44346e1ec9ef.service → /usr/lib/systemd/system/ceph-volume@.service.
Running command: /usr/bin/systemctl enable --runtime ceph-osd@0
 stderr: Created symlink /run/systemd/system/ceph-osd.target.wants/ceph-osd@0.service → /usr/lib/systemd/system/ceph-osd@.service.
Running command: /usr/bin/systemctl start ceph-osd@0
--> ceph-volume lvm activate successful for osd ID: 0
--> ceph-volume lvm create successful for: /dev/sdb1
ceph.conf                         100%  280   437.8KB/s   00:00
ceph.client.admin.keyring         100%  151   244.2KB/s   00:00
ceph.keyring                      100%  129   234.2KB/s   00:00
Running command: /usr/bin/ceph-authtool --gen-print-key
Running command: /usr/bin/ceph --cluster ceph --name client.bootstrap-osd --keyring /var/lib/ceph/bootstrap-osd/ceph.keyring -i - osd new e779f17f-8f6f-4913-b5fc-8fa8194c1bb4
Running command: /usr/sbin/vgcreate --force --yes ceph-960d0d48-50ba-4911-9d7b-34bbb61ada72 /dev/sdb1
 stdout: Physical volume "/dev/sdb1" successfully created.
 stdout: Volume group "ceph-960d0d48-50ba-4911-9d7b-34bbb61ada72" successfully created
Running command: /usr/sbin/lvcreate --yes -l 100%FREE -n osd-block-e779f17f-8f6f-4913-b5fc-8fa8194c1bb4 ceph-960d0d48-50ba-4911-9d7b-34bbb61ada72
 stdout: Logical volume "osd-block-e779f17f-8f6f-4913-b5fc-8fa8194c1bb4" created.
Running command: /usr/bin/ceph-authtool --gen-print-key
Running command: /usr/bin/mount -t tmpfs tmpfs /var/lib/ceph/osd/ceph-1
Running command: /usr/sbin/restorecon /var/lib/ceph/osd/ceph-1
Running command: /usr/bin/chown -h ceph:ceph /dev/ceph-960d0d48-50ba-4911-9d7b-34bbb61ada72/osd-block-e779f17f-8f6f-4913-b5fc-8fa8194c1bb4
Running command: /usr/bin/chown -R ceph:ceph /dev/dm-2
Running command: /usr/bin/ln -s /dev/ceph-960d0d48-50ba-4911-9d7b-34bbb61ada72/osd-block-e779f17f-8f6f-4913-b5fc-8fa8194c1bb4 /var/lib/ceph/osd/ceph-1/block
Running command: /usr/bin/ceph --cluster ceph --name client.bootstrap-osd --keyring /var/lib/ceph/bootstrap-osd/ceph.keyring mon getmap -o /var/lib/ceph/osd/ceph-1/activate.monmap
 stderr: got monmap epoch 2
Running command: /usr/bin/ceph-authtool /var/lib/ceph/osd/ceph-1/keyring --create-keyring --name osd.1 --add-key AQAOaANfwvYjCBAAhtxsLIYysPXPf3IpBbmgLQ==
 stdout: creating /var/lib/ceph/osd/ceph-1/keyring
added entity osd.1 auth(key=AQAOaANfwvYjCBAAhtxsLIYysPXPf3IpBbmgLQ==)
Running command: /usr/bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-1/keyring
Running command: /usr/bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-1/
Running command: /usr/bin/ceph-osd --cluster ceph --osd-objectstore bluestore --mkfs -i 1 --monmap /var/lib/ceph/osd/ceph-1/activate.monmap --keyfile - --osd-data /var/lib/ceph/osd/ceph-1/ --osd-uuid e779f17f-8f6f-4913-b5fc-8fa8194c1bb4 --setuser ceph --setgroup ceph
--> ceph-volume lvm prepare successful for: /dev/sdb1
Running command: /usr/bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-1
Running command: /usr/bin/ceph-bluestore-tool --cluster=ceph prime-osd-dir --dev /dev/ceph-960d0d48-50ba-4911-9d7b-34bbb61ada72/osd-block-e779f17f-8f6f-4913-b5fc-8fa8194c1bb4 --path /var/lib/ceph/osd/ceph-1 --no-mon-config
Running command: /usr/bin/ln -snf /dev/ceph-960d0d48-50ba-4911-9d7b-34bbb61ada72/osd-block-e779f17f-8f6f-4913-b5fc-8fa8194c1bb4 /var/lib/ceph/osd/ceph-1/block
Running command: /usr/bin/chown -h ceph:ceph /var/lib/ceph/osd/ceph-1/block
Running command: /usr/bin/chown -R ceph:ceph /dev/dm-2
Running command: /usr/bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-1
Running command: /usr/bin/systemctl enable ceph-volume@lvm-1-e779f17f-8f6f-4913-b5fc-8fa8194c1bb4
 stderr: Created symlink /etc/systemd/system/multi-user.target.wants/ceph-volume@lvm-1-e779f17f-8f6f-4913-b5fc-8fa8194c1bb4.service → /usr/lib/systemd/system/ceph-volume@.service.
Running command: /usr/bin/systemctl enable --runtime ceph-osd@1
 stderr: Created symlink /run/systemd/system/ceph-osd.target.wants/ceph-osd@1.service → /usr/lib/systemd/system/ceph-osd@.service.
Running command: /usr/bin/systemctl start ceph-osd@1
--> ceph-volume lvm activate successful for osd ID: 1
--> ceph-volume lvm create successful for: /dev/sdb1
ceph.conf                         100%  280   435.3KB/s   00:00
ceph.client.admin.keyring         100%  151   331.8KB/s   00:00
ceph.keyring                      100%  129   276.6KB/s   00:00
Running command: /usr/bin/ceph-authtool --gen-print-key
Running command: /usr/bin/ceph --cluster ceph --name client.bootstrap-osd --keyring /var/lib/ceph/bootstrap-osd/ceph.keyring -i - osd new 6b3740ee-722e-4f9c-ae80-6527834f5bf0
Running command: /usr/sbin/vgcreate --force --yes ceph-ee68113d-ab33-4a67-bb03-17397160e51c /dev/sdb1
 stdout: Physical volume "/dev/sdb1" successfully created.
 stdout: Volume group "ceph-ee68113d-ab33-4a67-bb03-17397160e51c" successfully created
Running command: /usr/sbin/lvcreate --yes -l 100%FREE -n osd-block-6b3740ee-722e-4f9c-ae80-6527834f5bf0 ceph-ee68113d-ab33-4a67-bb03-17397160e51c
 stdout: Logical volume "osd-block-6b3740ee-722e-4f9c-ae80-6527834f5bf0" created.
Running command: /usr/bin/ceph-authtool --gen-print-key
Running command: /usr/bin/mount -t tmpfs tmpfs /var/lib/ceph/osd/ceph-2
Running command: /usr/sbin/restorecon /var/lib/ceph/osd/ceph-2
Running command: /usr/bin/chown -h ceph:ceph /dev/ceph-ee68113d-ab33-4a67-bb03-17397160e51c/osd-block-6b3740ee-722e-4f9c-ae80-6527834f5bf0
Running command: /usr/bin/chown -R ceph:ceph /dev/dm-2
Running command: /usr/bin/ln -s /dev/ceph-ee68113d-ab33-4a67-bb03-17397160e51c/osd-block-6b3740ee-722e-4f9c-ae80-6527834f5bf0 /var/lib/ceph/osd/ceph-2/block
Running command: /usr/bin/ceph --cluster ceph --name client.bootstrap-osd --keyring /var/lib/ceph/bootstrap-osd/ceph.keyring mon getmap -o /var/lib/ceph/osd/ceph-2/activate.monmap
 stderr: got monmap epoch 2
Running command: /usr/bin/ceph-authtool /var/lib/ceph/osd/ceph-2/keyring --create-keyring --name osd.2 --add-key AQATaANfGx39NxAAf4M+MeJLuQgzk32rMXKo6A==
 stdout: creating /var/lib/ceph/osd/ceph-2/keyring
added entity osd.2 auth(key=AQATaANfGx39NxAAf4M+MeJLuQgzk32rMXKo6A==)
Running command: /usr/bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-2/keyring
Running command: /usr/bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-2/
Running command: /usr/bin/ceph-osd --cluster ceph --osd-objectstore bluestore --mkfs -i 2 --monmap /var/lib/ceph/osd/ceph-2/activate.monmap --keyfile - --osd-data /var/lib/ceph/osd/ceph-2/ --osd-uuid 6b3740ee-722e-4f9c-ae80-6527834f5bf0 --setuser ceph --setgroup ceph
--> ceph-volume lvm prepare successful for: /dev/sdb1
Running command: /usr/bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-2
Running command: /usr/bin/ceph-bluestore-tool --cluster=ceph prime-osd-dir --dev /dev/ceph-ee68113d-ab33-4a67-bb03-17397160e51c/osd-block-6b3740ee-722e-4f9c-ae80-6527834f5bf0 --path /var/lib/ceph/osd/ceph-2 --no-mon-config
Running command: /usr/bin/ln -snf /dev/ceph-ee68113d-ab33-4a67-bb03-17397160e51c/osd-block-6b3740ee-722e-4f9c-ae80-6527834f5bf0 /var/lib/ceph/osd/ceph-2/block
Running command: /usr/bin/chown -h ceph:ceph /var/lib/ceph/osd/ceph-2/block
Running command: /usr/bin/chown -R ceph:ceph /dev/dm-2
Running command: /usr/bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-2
Running command: /usr/bin/systemctl enable ceph-volume@lvm-2-6b3740ee-722e-4f9c-ae80-6527834f5bf0
 stderr: Created symlink /etc/systemd/system/multi-user.target.wants/ceph-volume@lvm-2-6b3740ee-722e-4f9c-ae80-6527834f5bf0.service → /usr/lib/systemd/system/ceph-volume@.service.
Running command: /usr/bin/systemctl enable --runtime ceph-osd@2
 stderr: Created symlink /run/systemd/system/ceph-osd.target.wants/ceph-osd@2.service → /usr/lib/systemd/system/ceph-osd@.service.
Running command: /usr/bin/systemctl start ceph-osd@2
--> ceph-volume lvm activate successful for osd ID: 2
--> ceph-volume lvm create successful for: /dev/sdb1

# check the cluster status
# everything is fine if it reports [HEALTH_OK]
[root@node1 ~]# ceph -s
  cluster:
    id:     f912f30f-1028-400c-8ee2-a440218b750e
    health: HEALTH_OK

  services:
    mon: 1 daemons, quorum node1 (age 25h)
    mgr: node1(active, since 23h)
    osd: 3 osds: 3 up (since 85s), 3 in (since 85s)

  data:
    pools:   0 pools, 0 pgs
    objects: 0 objects, 0 B
    usage:   3.0 GiB used, 45 GiB / 48 GiB avail
    pgs:

# check the OSD tree
[root@node1 ~]# ceph osd tree
ID CLASS WEIGHT  TYPE NAME      STATUS REWEIGHT PRI-AFF
-1       0.04678 root default
-3       0.01559     host node1
 0   hdd 0.01559         osd.0      up  1.00000 1.00000
-5       0.01559     host node2
 1   hdd 0.01559         osd.1      up  1.00000 1.00000
-7       0.01559     host node3
 2   hdd 0.01559         osd.2      up  1.00000 1.00000

[root@node1 ~]# ceph df
RAW STORAGE:
    CLASS     SIZE       AVAIL      USED        RAW USED     %RAW USED
    hdd       48 GiB     45 GiB     4.7 MiB      3.0 GiB          6.26
    TOTAL     48 GiB     45 GiB     4.7 MiB      3.0 GiB          6.26

POOLS:
    POOL     ID     STORED     OBJECTS     USED     %USED     MAX AVAIL
```
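Instead of reading the `ceph osd tree` table by eye, the check can be scripted. This minimal sketch assumes the plain-text layout shown above, in which each `osd.N` name is followed by its status. The function name `check_osds_up` is hypothetical. For robust automation, the JSON output (`ceph osd tree -f json`) is a safer input than the human-readable table.

```bash
#!/usr/bin/env bash
# Hypothetical check: read plain-text `ceph osd tree` output on stdin and
# succeed only if at least $1 OSDs are listed and every one of them is "up".
check_osds_up() {
    local expected="${1:-1}"
    awk -v expected="$expected" '
        {
            for (i = 1; i <= NF; i++) {
                if ($i == "host") {
                    host = $(i + 1)                 # current host bucket
                } else if ($i ~ /^osd\.[0-9]+$/) {
                    total++
                    if ($(i + 1) == "up") {
                        up++
                    } else {
                        printf "%s on %s is %s\n", $i, host, $(i + 1) > "/dev/stderr"
                    }
                }
            }
        }
        END {
            printf "%d/%d OSDs up\n", up, total
            if (total >= expected && up == total) exit 0
            exit 1
        }
    '
}
```

For the cluster above, `ceph osd tree | check_osds_up 3` prints `3/3 OSDs up` and exits with status 0. Any OSD that is not up is reported on stderr together with its host.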