[9] Cephadm: cluster configuration #1

21 July 2020

We will now install and configure a Ceph cluster with [Cephadm], a tool for deploying and managing a Ceph cluster.

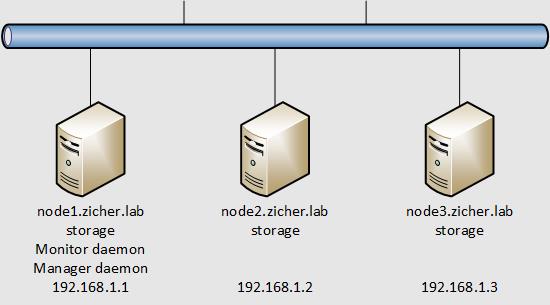

In this example we configure a Ceph cluster with 3 nodes. Free block devices on the nodes are used for storage, here [/dev/sdb].
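Before handing a disk to Ceph it can help to confirm that it really is unused. The following is a minimal sketch, not part of the original procedure: the helper name `disk_looks_free` and the `DISK` variable are hypothetical, and the check only looks at partitions and mount points reported by `lsblk` (it does not detect leftover filesystem signatures).

```shell
#!/usr/bin/env bash
# Sketch: decide from lsblk output whether a disk looks unused.
# Assumption: the data disk is /dev/sdb, as in this example.
DISK="${DISK:-/dev/sdb}"

disk_looks_free() {
    # Reads the output of `lsblk -rno NAME,TYPE,MOUNTPOINT <disk>` on stdin.
    # The disk counts as free when there is exactly one row (the disk itself,
    # no partitions or LVM children) and that row has no mount point.
    awk 'NF > 2 { mounted = 1 } END { exit !(NR == 1 && !mounted) }'
}

if [ -b "$DISK" ]; then
    if lsblk -rno NAME,TYPE,MOUNTPOINT "$DISK" | disk_looks_free; then
        echo "$DISK looks unused"
    else
        echo "$DISK has partitions or is mounted"
    fi
else
    echo "$DISK is not a block device on this host"
fi
```

Run it on each node; a disk that already carries partitions or a mounted filesystem should be cleaned up before it is used for an OSD.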

The network layout looks as follows.

[1] [Cephadm] deploys a container-based cluster, so Podman must first be installed on all nodes.
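As a minimal sketch of this step, the loop below installs Podman on every node over SSH. The host names `node2` and `node3`, root SSH access, and the `DRY_RUN` switch are assumptions made for illustration (only `node1` is named in this guide). With `DRY_RUN=1`, the default here, the commands are only printed.

```shell
#!/usr/bin/env bash
# Sketch: install a package on all cluster nodes.
# Assumption: the nodes are reachable as root over SSH under these (hypothetical) names.
NODES="${NODES:-node1 node2 node3}"
DRY_RUN="${DRY_RUN:-1}"   # 1 = print the commands only, 0 = execute them

install_on_nodes() {
    # $1 = package to install on every host listed in $NODES
    local pkg="$1" node
    for node in $NODES; do
        if [ "$DRY_RUN" = "1" ]; then
            echo "ssh root@${node} dnf -y install ${pkg}"
        else
            ssh "root@${node}" dnf -y install "${pkg}"
        fi
    done
}

install_on_nodes podman
```

Set `DRY_RUN=0` once the printed commands look right for your environment.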

[2] [Cephadm] uses Python 3 to configure the nodes, so it must also be installed on all nodes first.
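A quick way to confirm both prerequisites on a node is to check that the commands are on the `PATH`. This is only a sketch: the helper name `missing_tools` is hypothetical, and the suggested package names `podman` and `python3` are assumed to match your distribution's repositories.

```shell
#!/usr/bin/env bash
# Sketch: report which prerequisite commands are missing on this host.
missing_tools() {
    # Prints the name of every argument that is not found in PATH.
    local tool
    for tool in "$@"; do
        command -v "$tool" >/dev/null 2>&1 || echo "$tool"
    done
}

missing="$(missing_tools podman python3)"
if [ -n "$missing" ]; then
    echo "Missing prerequisites:" $missing
    echo "Install them first, e.g.: dnf -y install" $missing
else
    echo "podman and python3 are present"
fi
```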

[3] Install [Cephadm] on one node; in this example we install it on [node1].

    [root@node1 ~]# dnf -y install centos-release-ceph-octopus epel-release
    [root@node1 ~]# dnf -y install cephadm

[4] Create a new Ceph cluster.

    [root@node1 ~]# mkdir -p /etc/ceph
    [root@node1 ~]# cephadm bootstrap --allow-fqdn-hostname --mon-ip 192.168.1.1
    INFO:cephadm:Verifying podman|docker is present...
    INFO:cephadm:Verifying lvm2 is present...
    INFO:cephadm:Verifying time synchronization is in place...
    INFO:cephadm:Unit chronyd.service is enabled and running
    INFO:cephadm:Repeating the final host check...
    INFO:cephadm:podman|docker (/usr/bin/podman) is present
    INFO:cephadm:systemctl is present
    INFO:cephadm:lvcreate is present
    INFO:cephadm:Unit chronyd.service is enabled and running
    INFO:cephadm:Host looks OK
    INFO:root:Cluster fsid: 43af2de0-cb74-11ea-8312-000c292b8c0c
    INFO:cephadm:Verifying IP 192.168.1.1 port 3300 ...
    INFO:cephadm:Verifying IP 192.168.1.1 port 6789 ...
    INFO:cephadm:Mon IP 192.168.1.1 is in CIDR network 192.168.1.0/24
    INFO:cephadm:Pulling latest docker.io/ceph/ceph:v15 container...
    INFO:cephadm:Extracting ceph user uid/gid from container image...
    INFO:cephadm:Creating initial keys...
    INFO:cephadm:Creating initial monmap...
    INFO:cephadm:Creating mon...
    INFO:cephadm:Non-zero exit code 1 from /usr/bin/firewall-cmd --permanent --query-service ceph-mon
    INFO:cephadm:/usr/bin/firewall-cmd:stdout no
    INFO:cephadm:Enabling firewalld service ceph-mon in current zone...
    INFO:cephadm:Waiting for mon to start...
    INFO:cephadm:Waiting for mon...
    INFO:cephadm:Assimilating anything we can from ceph.conf...
    INFO:cephadm:Generating new minimal ceph.conf...
    INFO:cephadm:Restarting the monitor...
    INFO:cephadm:Setting mon public_network...
    INFO:cephadm:Creating mgr...
    INFO:cephadm:Non-zero exit code 1 from /usr/bin/firewall-cmd --permanent --query-service ceph
    INFO:cephadm:/usr/bin/firewall-cmd:stdout no
    INFO:cephadm:Enabling firewalld service ceph in current zone...
    INFO:cephadm:Non-zero exit code 1 from /usr/bin/firewall-cmd --permanent --query-port 8080/tcp
    INFO:cephadm:/usr/bin/firewall-cmd:stdout no
    INFO:cephadm:Enabling firewalld port 8080/tcp in current zone...
    INFO:cephadm:Non-zero exit code 1 from /usr/bin/firewall-cmd --permanent --query-port 8443/tcp
    INFO:cephadm:/usr/bin/firewall-cmd:stdout no
    INFO:cephadm:Enabling firewalld port 8443/tcp in current zone...
    INFO:cephadm:Non-zero exit code 1 from /usr/bin/firewall-cmd --permanent --query-port 9283/tcp
    INFO:cephadm:/usr/bin/firewall-cmd:stdout no
    INFO:cephadm:Enabling firewalld port 9283/tcp in current zone...
    INFO:cephadm:Wrote keyring to /etc/ceph/ceph.client.admin.keyring
    INFO:cephadm:Wrote config to /etc/ceph/ceph.conf
    INFO:cephadm:Waiting for mgr to start...
    INFO:cephadm:Waiting for mgr...
    INFO:cephadm:mgr not available, waiting (1/10)...
    INFO:cephadm:mgr not available, waiting (2/10)...
    INFO:cephadm:mgr not available, waiting (3/10)...
    INFO:cephadm:mgr not available, waiting (4/10)...
    INFO:cephadm:mgr not available, waiting (5/10)...
    INFO:cephadm:mgr not available, waiting (6/10)...
    INFO:cephadm:mgr not available, waiting (7/10)...
    INFO:cephadm:mgr not available, waiting (8/10)...
    INFO:cephadm:Enabling cephadm module...
    INFO:cephadm:Waiting for the mgr to restart...
    INFO:cephadm:Waiting for Mgr epoch 5...
    INFO:cephadm:Setting orchestrator backend to cephadm...
    INFO:cephadm:Generating ssh key...
    INFO:cephadm:Wrote public SSH key to to /etc/ceph/ceph.pub
    INFO:cephadm:Adding key to root@localhost's authorized_keys...
    INFO:cephadm:Adding host node1.zicher.lab...
    INFO:cephadm:Deploying mon service with default placement...
    INFO:cephadm:Deploying mgr service with default placement...
    INFO:cephadm:Deploying crash service with default placement...
    INFO:cephadm:Enabling mgr prometheus module...
    INFO:cephadm:Deploying prometheus service with default placement...
    INFO:cephadm:Deploying grafana service with default placement...
    INFO:cephadm:Deploying node-exporter service with default placement...
    INFO:cephadm:Deploying alertmanager service with default placement...
    INFO:cephadm:Enabling the dashboard module...
    INFO:cephadm:Waiting for the mgr to restart...
    INFO:cephadm:Waiting for Mgr epoch 13...
    INFO:cephadm:Generating a dashboard self-signed certificate...
    INFO:cephadm:Creating initial admin user...
    INFO:cephadm:Fetching dashboard port number...
    INFO:cephadm:Ceph Dashboard is now available at:

                 URL: https://node1.zicher.lab:8443/
                User: admin
            Password: lncofcvtvf

    INFO:cephadm:You can access the Ceph CLI with:

            sudo /usr/sbin/cephadm shell --fsid 43af2de0-cb74-11ea-8312-000c292b8c0c -c /etc/ceph/ceph.conf -k /etc/ceph/ceph.client.admin.keyring

    INFO:cephadm:Please consider enabling telemetry to help improve Ceph:

            ceph telemetry on

    For more information see:

            https://docs.ceph.com/docs/master/mgr/telemetry/

    INFO:cephadm:Bootstrap complete.

    # enable the Ceph CLI
    [root@node1 ~]# alias ceph='cephadm shell -- ceph'
    [root@node1 ~]# echo "alias ceph='cephadm shell -- ceph'" >> ~/.bashrc
    [root@node1 ~]# ceph -v
    INFO:cephadm:Inferring fsid 43af2de0-cb74-11ea-8312-000c292b8c0c
    INFO:cephadm:Using recent ceph image docker.io/ceph/ceph:v15
    ceph version 15.2.4 (7447c15c6ff58d7fce91843b705a268a1917325c) octopus (stable)

    # [HEALTH_WARN] is expected at this point, because no OSDs have been added yet
    [root@node1 ~]# ceph -s
    INFO:cephadm:Inferring fsid 43af2de0-cb74-11ea-8312-000c292b8c0c
    INFO:cephadm:Using recent ceph image docker.io/ceph/ceph:v15
      cluster:
        id:     43af2de0-cb74-11ea-8312-000c292b8c0c
        health: HEALTH_WARN
                Reduced data availability: 1 pg inactive
                OSD count 0 < osd_pool_default_size 3

      services:
        mon: 1 daemons, quorum node1.zicher.lab (age 5m)
        mgr: node1.zicher.lab.ntlwxo(active, since 4m)
        osd: 0 osds: 0 up, 0 in

      data:
        pools:   1 pools, 1 pgs
        objects: 0 objects, 0 B
        usage:   0 B used, 0 B / 0 B avail
        pgs:     100.000% pgs unknown
                 1 unknown

    # the containers run as services
    [root@node1 ~]# podman ps
    CONTAINER ID  IMAGE                                 COMMAND               CREATED             STATUS                     PORTS  NAMES
    78b025667823  docker.io/ceph/ceph-grafana:latest    -c %u %g /var/lib...  1 second ago        Up Less than a second ago         zen_heyrovsky
    9e089105a6b9  docker.io/prom/alertmanager:v0.20.0   --config.file=/et...  2 seconds ago       Up 2 seconds ago                  ceph-43af2de0-cb74-11ea-8312-000c292b8c0c-alertmanager.node1
    872a98d77ef0  docker.io/prom/prometheus:v2.18.1     --config.file=/et...  58 seconds ago      Up 57 seconds ago                 ceph-43af2de0-cb74-11ea-8312-000c292b8c0c-prometheus.node1
    ce42d9081797  docker.io/prom/node-exporter:v0.18.1  --no-collector.ti...  About a minute ago  Up About a minute ago             ceph-43af2de0-cb74-11ea-8312-000c292b8c0c-node-exporter.node1
    597b96e0265b  docker.io/ceph/ceph-grafana:latest    /bin/bash             About a minute ago  Up About a minute ago             ceph-43af2de0-cb74-11ea-8312-000c292b8c0c-grafana.node1
    64397312e8ef  docker.io/ceph/ceph:v15               -n client.crash.n...  3 minutes ago       Up 3 minutes ago                  ceph-43af2de0-cb74-11ea-8312-000c292b8c0c-crash.node1
    740d813b9005  docker.io/ceph/ceph:v15               -n mgr.node1.zich...  6 minutes ago       Up 6 minutes ago                  ceph-43af2de0-cb74-11ea-8312-000c292b8c0c-mgr.node1.zicher.lab.ntlwxo
    8317a09f839e  docker.io/ceph/ceph:v15               -n mon.node1.zich...  6 minutes ago       Up 6 minutes ago                  ceph-43af2de0-cb74-11ea-8312-000c292b8c0c-mon.node1.zicher.lab

    # systemd units for the containers
    [root@node1 ~]# systemctl status ceph-* --no-pager
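To make the health check after bootstrap scriptable, the status line can be pulled out of the `ceph -s` text output. The sketch below is an illustration only: the helper names `ceph_health_status` and `health_expected_before_osds` are hypothetical, and the sample input is copied from the output shown in this step so the block runs without a cluster.

```shell
#!/usr/bin/env bash
# Sketch: extract the health status from `ceph -s` text output.
ceph_health_status() {
    # Reads `ceph -s` output on stdin and prints the status, e.g. HEALTH_WARN.
    awk '$1 == "health:" { print $2; exit }'
}

health_expected_before_osds() {
    # Right after bootstrap, with no OSDs yet, HEALTH_OK and HEALTH_WARN are both acceptable.
    case "$1" in
        HEALTH_OK|HEALTH_WARN) return 0 ;;
        *) return 1 ;;
    esac
}

# Sample input taken from the `ceph -s` output in this guide.
sample='  cluster:
    id:     43af2de0-cb74-11ea-8312-000c292b8c0c
    health: HEALTH_WARN
            Reduced data availability: 1 pg inactive'

status="$(printf '%s\n' "$sample" | ceph_health_status)"
if health_expected_before_osds "$status"; then
    echo "$status is acceptable before OSDs are added"
else
    echo "unexpected cluster state: $status"
fi
```

On a live node the same helper can be fed directly, for example with `ceph -s | ceph_health_status`.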
