[2] Cluster configuration #2

July 14, 2020

We will now install and configure the distributed storage system Ceph to build a storage cluster.

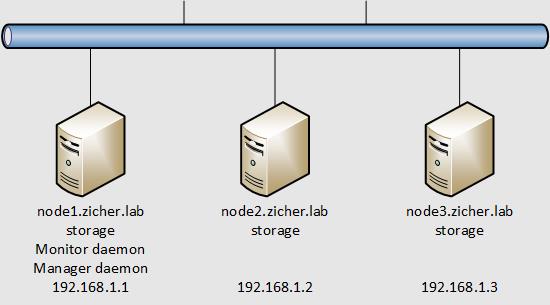

In this example we configure a Ceph cluster with 3 nodes. Each node uses a free block device for storage, here [/dev/sdb].
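Step [2] below erases /dev/sdb. Before that, it may be worth confirming that the disk really is unused on every node. The following is a minimal sketch and is not part of the original procedure. It assumes:

- root SSH access from node1 to node1, node2 and node3;
- that any partition, LVM volume or filesystem signature reported by `lsblk` means the disk is in use.

The function names `device_output_is_blank` and `check_osd_disks` are hypothetical.

```shell
#!/usr/bin/env bash
# Pre-flight sketch (assumptions: run on node1 with root SSH access to
# node1..node3; /dev/sdb is the intended OSD disk on every node).

# Reads the output of `lsblk -n -l -o NAME,FSTYPE <device>` on stdin.
# Succeeds only if exactly one entry is listed (the disk itself, with no
# partitions or LVM volumes below it) and it carries no signature.
device_output_is_blank() {
  local count=0 signature=0 name fstype
  while read -r name fstype; do
    [ -z "$name" ] && continue        # ignore empty lines
    count=$((count + 1))
    [ -n "$fstype" ] && signature=1   # e.g. xfs or LVM2_member
  done
  [ "$count" -eq 1 ] && [ "$signature" -eq 0 ]
}

# Checks /dev/sdb on each node over SSH; returns 1 if any disk looks used
# (or could not be queried at all).
check_osd_disks() {
  local rc=0 node
  for node in node1 node2 node3; do
    if ssh "$node" lsblk -n -l -o NAME,FSTYPE /dev/sdb | device_output_is_blank; then
      echo "$node: /dev/sdb looks unused"
    else
      echo "$node: /dev/sdb has partitions, a signature, or is missing" >&2
      rc=1
    fi
  done
  return "$rc"
}
```

Running `check_osd_disks` before step [2] should report every node as unused. If any node is reported otherwise, inspect that disk manually before wiping it.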

The network layout is as follows.

[1] Configure the [Monitor Daemon] and the [Manager Daemon] first; details are here.
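The OSD loop in step [2] copies three files from node1 to the other nodes:

- `/etc/ceph/ceph.conf`
- `/etc/ceph/ceph.client.admin.keyring`
- `/var/lib/ceph/bootstrap-osd/ceph.keyring`

These files presumably need to exist on node1 once the Monitor and Manager are set up. The sketch below checks for them. `check_admin_files` is a hypothetical helper. Its optional root-prefix argument is an assumption added only so the check can be exercised against a test directory.

```shell
#!/usr/bin/env bash
# Sketch: verify that the files copied by the OSD loop exist and are
# non-empty on the admin node. ROOT (optional, default "") is prepended
# to every path; it is a hypothetical convenience for testing.
check_admin_files() {
  local root=${1:-} missing=0 f
  for f in /etc/ceph/ceph.conf \
           /etc/ceph/ceph.client.admin.keyring \
           /var/lib/ceph/bootstrap-osd/ceph.keyring; do
    if [ ! -s "${root}${f}" ]; then
      echo "missing or empty: ${root}${f}" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

On node1, `check_admin_files && echo "ready for the OSD step"` prints the message only when all three files are present.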

[2] From the Admin Node, configure an OSD (Object Storage Device) on every node. The block device [/dev/sdb] will be partitioned and formatted for the OSD. WARNING! All data on it will be deleted!

```
# if Firewalld is running, allow the ceph service
[root@node1 ~]# for NODE in node1 node2 node3
do
    ssh $NODE "firewall-cmd --add-service=ceph --permanent; firewall-cmd --reload"
done
success
success
success
success
success
success

# configure the OSD settings on each node
[root@node1 ~]# for NODE in node1 node2 node3
do
    # node1 is the admin node and already has these files; copy them to the others
    if [ "${NODE}" != "node1" ]
    then
        scp /etc/ceph/ceph.conf ${NODE}:/etc/ceph/ceph.conf
        scp /etc/ceph/ceph.client.admin.keyring ${NODE}:/etc/ceph
        scp /var/lib/ceph/bootstrap-osd/ceph.keyring ${NODE}:/var/lib/ceph/bootstrap-osd
    fi
    ssh $NODE \
    "chown ceph:ceph /etc/ceph/ceph.* /var/lib/ceph/bootstrap-osd/*; \
    parted --script /dev/sdb 'mklabel gpt'; \
    parted --script /dev/sdb 'mkpart primary 0% 100%'; \
    ceph-volume lvm create --data /dev/sdb1"
done
Running command: /usr/bin/ceph-authtool --gen-print-key
Running command: /usr/bin/ceph --cluster ceph --name client.bootstrap-osd --keyring /var/lib/ceph/bootstrap-osd/ceph.keyring -i - osd new 1b98de09-4e67-442c-859e-e08be6d42315
Running command: /usr/sbin/vgcreate --force --yes ceph-ef9a2351-3afc-4e5e-ae67-5aa2bd27125a /dev/sdb1
 stdout: Physical volume "/dev/sdb1" successfully created.
 stdout: Volume group "ceph-ef9a2351-3afc-4e5e-ae67-5aa2bd27125a" successfully created
Running command: /usr/sbin/lvcreate --yes -l 100%FREE -n osd-block-1b98de09-4e67-442c-859e-e08be6d42315 ceph-ef9a2351-3afc-4e5e-ae67-5aa2bd27125a
 stdout: Logical volume "osd-block-1b98de09-4e67-442c-859e-e08be6d42315" created.
Running command: /usr/bin/ceph-authtool --gen-print-key
Running command: /usr/bin/mount -t tmpfs tmpfs /var/lib/ceph/osd/ceph-0
Running command: /usr/sbin/restorecon /var/lib/ceph/osd/ceph-0
Running command: /usr/bin/chown -h ceph:ceph /dev/ceph-ef9a2351-3afc-4e5e-ae67-5aa2bd27125a/osd-block-1b98de09-4e67-442c-859e-e08be6d42315
Running command: /usr/bin/chown -R ceph:ceph /dev/dm-3
Running command: /usr/bin/ln -s /dev/ceph-ef9a2351-3afc-4e5e-ae67-5aa2bd27125a/osd-block-1b98de09-4e67-442c-859e-e08be6d42315 /var/lib/ceph/osd/ceph-0/block
Running command: /usr/bin/ceph --cluster ceph --name client.bootstrap-osd --keyring /var/lib/ceph/bootstrap-osd/ceph.keyring mon getmap -o /var/lib/ceph/osd/ceph-0/activate.monmap
 stderr: got monmap epoch 2
Running command: /usr/bin/ceph-authtool /var/lib/ceph/osd/ceph-0/keyring --create-keyring --name osd.0 --add-key AQBwxQ1fiDokBBAAibZnMPoqva6Nj2gJS/EK4g==
 stdout: creating /var/lib/ceph/osd/ceph-0/keyring
added entity osd.0 auth(key=AQBwxQ1fiDokBBAAibZnMPoqva6Nj2gJS/EK4g==)
Running command: /usr/bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-0/keyring
Running command: /usr/bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-0/
Running command: /usr/bin/ceph-osd --cluster ceph --osd-objectstore bluestore --mkfs -i 0 --monmap /var/lib/ceph/osd/ceph-0/activate.monmap --keyfile - --osd-data /var/lib/ceph/osd/ceph-0/ --osd-uuid 1b98de09-4e67-442c-859e-e08be6d42315 --setuser ceph --setgroup ceph
--> ceph-volume lvm prepare successful for: /dev/sdb1
Running command: /usr/bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-0
Running command: /usr/bin/ceph-bluestore-tool --cluster=ceph prime-osd-dir --dev /dev/ceph-ef9a2351-3afc-4e5e-ae67-5aa2bd27125a/osd-block-1b98de09-4e67-442c-859e-e08be6d42315 --path /var/lib/ceph/osd/ceph-0 --no-mon-config
Running command: /usr/bin/ln -snf /dev/ceph-ef9a2351-3afc-4e5e-ae67-5aa2bd27125a/osd-block-1b98de09-4e67-442c-859e-e08be6d42315 /var/lib/ceph/osd/ceph-0/block
Running command: /usr/bin/chown -h ceph:ceph /var/lib/ceph/osd/ceph-0/block
Running command: /usr/bin/chown -R ceph:ceph /dev/dm-3
Running command: /usr/bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-0
Running command: /usr/bin/systemctl enable ceph-volume@lvm-0-1b98de09-4e67-442c-859e-e08be6d42315
 stderr: Created symlink /etc/systemd/system/multi-user.target.wants/ceph-volume@lvm-0-1b98de09-4e67-442c-859e-e08be6d42315.service → /usr/lib/systemd/system/ceph-volume@.service.
Running command: /usr/bin/systemctl enable --runtime ceph-osd@0
 stderr: Created symlink /run/systemd/system/ceph-osd.target.wants/ceph-osd@0.service → /usr/lib/systemd/system/ceph-osd@.service.
Running command: /usr/bin/systemctl start ceph-osd@0
--> ceph-volume lvm activate successful for osd ID: 0
--> ceph-volume lvm create successful for: /dev/sdb1
ceph.conf                       100%  281    10.9KB/s   00:00
ceph.client.admin.keyring       100%  151    66.5KB/s   00:00
ceph.keyring                    100%  129   211.3KB/s   00:00
Running command: /usr/bin/ceph-authtool --gen-print-key
Running command: /usr/bin/ceph --cluster ceph --name client.bootstrap-osd --keyring /var/lib/ceph/bootstrap-osd/ceph.keyring -i - osd new 125fb585-8529-4daa-9c8e-6f23f59af709
Running command: /usr/sbin/vgcreate --force --yes ceph-0d2ccbf7-ba94-4f6a-8802-3dd84d1957e6 /dev/sdb1
 stdout: Physical volume "/dev/sdb1" successfully created.
 stdout: Volume group "ceph-0d2ccbf7-ba94-4f6a-8802-3dd84d1957e6" successfully created
Running command: /usr/sbin/lvcreate --yes -l 100%FREE -n osd-block-125fb585-8529-4daa-9c8e-6f23f59af709 ceph-0d2ccbf7-ba94-4f6a-8802-3dd84d1957e6
 stdout: Logical volume "osd-block-125fb585-8529-4daa-9c8e-6f23f59af709" created.
Running command: /usr/bin/ceph-authtool --gen-print-key
Running command: /usr/bin/mount -t tmpfs tmpfs /var/lib/ceph/osd/ceph-1
Running command: /usr/sbin/restorecon /var/lib/ceph/osd/ceph-1
Running command: /usr/bin/chown -h ceph:ceph /dev/ceph-0d2ccbf7-ba94-4f6a-8802-3dd84d1957e6/osd-block-125fb585-8529-4daa-9c8e-6f23f59af709
Running command: /usr/bin/chown -R ceph:ceph /dev/dm-3
Running command: /usr/bin/ln -s /dev/ceph-0d2ccbf7-ba94-4f6a-8802-3dd84d1957e6/osd-block-125fb585-8529-4daa-9c8e-6f23f59af709 /var/lib/ceph/osd/ceph-1/block
Running command: /usr/bin/ceph --cluster ceph --name client.bootstrap-osd --keyring /var/lib/ceph/bootstrap-osd/ceph.keyring mon getmap -o /var/lib/ceph/osd/ceph-1/activate.monmap
 stderr: got monmap epoch 2
Running command: /usr/bin/ceph-authtool /var/lib/ceph/osd/ceph-1/keyring --create-keyring --name osd.1 --add-key AQCAxQ1f/iGSIRAAMk/EIoepjsWoEniLPx6j4Q==
 stdout: creating /var/lib/ceph/osd/ceph-1/keyring
added entity osd.1 auth(key=AQCAxQ1f/iGSIRAAMk/EIoepjsWoEniLPx6j4Q==)
Running command: /usr/bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-1/keyring
Running command: /usr/bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-1/
Running command: /usr/bin/ceph-osd --cluster ceph --osd-objectstore bluestore --mkfs -i 1 --monmap /var/lib/ceph/osd/ceph-1/activate.monmap --keyfile - --osd-data /var/lib/ceph/osd/ceph-1/ --osd-uuid 125fb585-8529-4daa-9c8e-6f23f59af709 --setuser ceph --setgroup ceph
--> ceph-volume lvm prepare successful for: /dev/sdb1
Running command: /usr/bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-1
Running command: /usr/bin/ceph-bluestore-tool --cluster=ceph prime-osd-dir --dev /dev/ceph-0d2ccbf7-ba94-4f6a-8802-3dd84d1957e6/osd-block-125fb585-8529-4daa-9c8e-6f23f59af709 --path /var/lib/ceph/osd/ceph-1 --no-mon-config
Running command: /usr/bin/ln -snf /dev/ceph-0d2ccbf7-ba94-4f6a-8802-3dd84d1957e6/osd-block-125fb585-8529-4daa-9c8e-6f23f59af709 /var/lib/ceph/osd/ceph-1/block
Running command: /usr/bin/chown -h ceph:ceph /var/lib/ceph/osd/ceph-1/block
Running command: /usr/bin/chown -R ceph:ceph /dev/dm-3
Running command: /usr/bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-1
Running command: /usr/bin/systemctl enable ceph-volume@lvm-1-125fb585-8529-4daa-9c8e-6f23f59af709
 stderr: Created symlink /etc/systemd/system/multi-user.target.wants/ceph-volume@lvm-1-125fb585-8529-4daa-9c8e-6f23f59af709.service → /usr/lib/systemd/system/ceph-volume@.service.
Running command: /usr/bin/systemctl enable --runtime ceph-osd@1
 stderr: Created symlink /run/systemd/system/ceph-osd.target.wants/ceph-osd@1.service → /usr/lib/systemd/system/ceph-osd@.service.
Running command: /usr/bin/systemctl start ceph-osd@1
--> ceph-volume lvm activate successful for osd ID: 1
--> ceph-volume lvm create successful for: /dev/sdb1
ceph.conf                       100%  281     8.2KB/s   00:00
ceph.client.admin.keyring       100%  151   255.5KB/s   00:00
ceph.keyring                    100%  129     5.3KB/s   00:00
Running command: /usr/bin/ceph-authtool --gen-print-key
Running command: /usr/bin/ceph --cluster ceph --name client.bootstrap-osd --keyring /var/lib/ceph/bootstrap-osd/ceph.keyring -i - osd new b521b554-0005-4acd-b77f-d3692ee695ea
Running command: /usr/sbin/vgcreate --force --yes ceph-a072c849-c56f-4317-b095-0c731ae98200 /dev/sdb1
 stdout: Physical volume "/dev/sdb1" successfully created.
 stdout: Volume group "ceph-a072c849-c56f-4317-b095-0c731ae98200" successfully created
Running command: /usr/sbin/lvcreate --yes -l 100%FREE -n osd-block-b521b554-0005-4acd-b77f-d3692ee695ea ceph-a072c849-c56f-4317-b095-0c731ae98200
 stdout: Logical volume "osd-block-b521b554-0005-4acd-b77f-d3692ee695ea" created.
Running command: /usr/bin/ceph-authtool --gen-print-key
Running command: /usr/bin/mount -t tmpfs tmpfs /var/lib/ceph/osd/ceph-2
Running command: /usr/sbin/restorecon /var/lib/ceph/osd/ceph-2
Running command: /usr/bin/chown -h ceph:ceph /dev/ceph-a072c849-c56f-4317-b095-0c731ae98200/osd-block-b521b554-0005-4acd-b77f-d3692ee695ea
Running command: /usr/bin/chown -R ceph:ceph /dev/dm-3
Running command: /usr/bin/ln -s /dev/ceph-a072c849-c56f-4317-b095-0c731ae98200/osd-block-b521b554-0005-4acd-b77f-d3692ee695ea /var/lib/ceph/osd/ceph-2/block
Running command: /usr/bin/ceph --cluster ceph --name client.bootstrap-osd --keyring /var/lib/ceph/bootstrap-osd/ceph.keyring mon getmap -o /var/lib/ceph/osd/ceph-2/activate.monmap
 stderr: got monmap epoch 2
Running command: /usr/bin/ceph-authtool /var/lib/ceph/osd/ceph-2/keyring --create-keyring --name osd.2 --add-key AQCIxQ1fKjbGAxAAYgi+9uhqo07zFA8GqzWbXg==
 stdout: creating /var/lib/ceph/osd/ceph-2/keyring
added entity osd.2 auth(key=AQCIxQ1fKjbGAxAAYgi+9uhqo07zFA8GqzWbXg==)
Running command: /usr/bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-2/keyring
Running command: /usr/bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-2/
Running command: /usr/bin/ceph-osd --cluster ceph --osd-objectstore bluestore --mkfs -i 2 --monmap /var/lib/ceph/osd/ceph-2/activate.monmap --keyfile - --osd-data /var/lib/ceph/osd/ceph-2/ --osd-uuid b521b554-0005-4acd-b77f-d3692ee695ea --setuser ceph --setgroup ceph
--> ceph-volume lvm prepare successful for: /dev/sdb1
Running command: /usr/bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-2
Running command: /usr/bin/ceph-bluestore-tool --cluster=ceph prime-osd-dir --dev /dev/ceph-a072c849-c56f-4317-b095-0c731ae98200/osd-block-b521b554-0005-4acd-b77f-d3692ee695ea --path /var/lib/ceph/osd/ceph-2 --no-mon-config
Running command: /usr/bin/ln -snf /dev/ceph-a072c849-c56f-4317-b095-0c731ae98200/osd-block-b521b554-0005-4acd-b77f-d3692ee695ea /var/lib/ceph/osd/ceph-2/block
Running command: /usr/bin/chown -h ceph:ceph /var/lib/ceph/osd/ceph-2/block
Running command: /usr/bin/chown -R ceph:ceph /dev/dm-3
Running command: /usr/bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-2
Running command: /usr/bin/systemctl enable ceph-volume@lvm-2-b521b554-0005-4acd-b77f-d3692ee695ea
 stderr: Created symlink /etc/systemd/system/multi-user.target.wants/ceph-volume@lvm-2-b521b554-0005-4acd-b77f-d3692ee695ea.service → /usr/lib/systemd/system/ceph-volume@.service.
Running command: /usr/bin/systemctl enable --runtime ceph-osd@2
 stderr: Created symlink /run/systemd/system/ceph-osd.target.wants/ceph-osd@2.service → /usr/lib/systemd/system/ceph-osd@.service.
Running command: /usr/bin/systemctl start ceph-osd@2
--> ceph-volume lvm activate successful for osd ID: 2
--> ceph-volume lvm create successful for: /dev/sdb1

# confirm the cluster status: it is OK when it shows [HEALTH_OK]
[root@node1 ~]# ceph -s
  cluster:
    id:     8a31deb0-114c-4639-8050-981f47e5403c
    health: HEALTH_OK

  services:
    mon: 1 daemons, quorum node1 (age 18h)
    mgr: node1(active, since 18h)
    osd: 3 osds: 3 up (since 13m), 3 in (since 13m)

  data:
    pools:   1 pools, 1 pgs
    objects: 0 objects, 0 B
    usage:   3.0 GiB used, 147 GiB / 150 GiB avail
    pgs:     1 active+clean

# confirm the OSD tree
[root@node1 ~]# ceph osd tree
ID  CLASS  WEIGHT   TYPE NAME       STATUS  REWEIGHT  PRI-AFF
-1         0.14639  root default
-3         0.04880      host node1
 0    hdd  0.04880          osd.0       up   1.00000  1.00000
-5         0.04880      host node2
 1    hdd  0.04880          osd.1       up   1.00000  1.00000
-7         0.04880      host node3
 2    hdd  0.04880          osd.2       up   1.00000  1.00000

[root@node1 ~]# ceph df
--- RAW STORAGE ---
CLASS  SIZE     AVAIL    USED     RAW USED  %RAW USED
hdd    150 GiB  147 GiB  7.6 MiB   3.0 GiB       2.01
TOTAL  150 GiB  147 GiB  7.6 MiB   3.0 GiB       2.01

--- POOLS ---
POOL                   ID  STORED  OBJECTS  USED  %USED  MAX AVAIL
device_health_metrics   1     0 B        0   0 B      0     46 GiB

[root@node1 ~]# ceph osd df
ID  CLASS  WEIGHT   REWEIGHT  SIZE     RAW USE  DATA     OMAP  META   AVAIL    %USE  VAR   PGS  STATUS
 0    hdd  0.04880   1.00000   50 GiB  1.0 GiB  2.5 MiB   0 B  1 GiB   49 GiB  2.01  1.00    1      up
 1    hdd  0.04880   1.00000   50 GiB  1.0 GiB  2.5 MiB   0 B  1 GiB   49 GiB  2.01  1.00    1      up
 2    hdd  0.04880   1.00000   50 GiB  1.0 GiB  2.4 MiB   0 B  1 GiB   49 GiB  2.01  1.00    1      up
                       TOTAL  150 GiB  3.0 GiB  7.4 MiB   0 B  3 GiB  147 GiB  2.01
MIN/MAX VAR: 1.00/1.00  STDDEV: 0
```
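The health and OSD checks above can also be scripted instead of re-running `ceph -s` by hand. This is a sketch under stated assumptions:

- `wait_for_health_ok` and `count_up_osds` are hypothetical helper names.
- The retry count and interval are arbitrary defaults.
- `count_up_osds` parses the plain-text `ceph osd tree` layout shown above, which may differ between Ceph releases.

```shell
#!/usr/bin/env bash
# Sketch: poll `ceph health` until it reports HEALTH_OK, and count the OSDs
# that `ceph osd tree` lists as "up". Defaults are assumptions.

# wait_for_health_ok [ATTEMPTS] [INTERVAL_SECONDS]
wait_for_health_ok() {
  local attempts=${1:-30} interval=${2:-10} i=1 status=""
  while [ "$i" -le "$attempts" ]; do
    status=$(ceph health 2>/dev/null)
    case "$status" in
      HEALTH_OK*) echo "HEALTH_OK after $i check(s)"; return 0 ;;
    esac
    sleep "$interval"
    i=$((i + 1))
  done
  echo "still not healthy: ${status:-no answer from ceph}" >&2
  return 1
}

# Reads `ceph osd tree` text on stdin; prints how many osd.N rows have
# "up" in the column right after the OSD name.
count_up_osds() {
  awk '{ for (i = 1; i < NF; i++)
           if ($i ~ /^osd\.[0-9]+$/ && $(i + 1) == "up") n++ }
       END { print n + 0 }'
}
```

For the cluster in this example, `wait_for_health_ok && ceph osd tree | count_up_osds` would be expected to print 3.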