[7] MicroK8s – Fluentd

21 February 2021

We will enable the Fluentd add-on to set up an Elasticsearch, Fluentd, Kibana (EFK Stack) on the MicroK8s cluster.

[1] Enable the Fluentd add-on built into MicroK8s on the primary node.

    [root@vlsr01 ~]# microk8s enable fluentd dns
    Enabling Fluentd-Elasticsearch
    Labeling nodes
    node/vlsr02.zicher.lab labeled
    node/vlsr01.zicher.lab labeled
    Addon dns is already enabled.
    --allow-privileged=true
    service/elasticsearch-logging created
    serviceaccount/elasticsearch-logging created
    clusterrole.rbac.authorization.k8s.io/elasticsearch-logging created
    clusterrolebinding.rbac.authorization.k8s.io/elasticsearch-logging created
    statefulset.apps/elasticsearch-logging created
    configmap/fluentd-es-config-v0.2.0 created
    serviceaccount/fluentd-es created
    clusterrole.rbac.authorization.k8s.io/fluentd-es created
    clusterrolebinding.rbac.authorization.k8s.io/fluentd-es created
    daemonset.apps/fluentd-es-v3.0.2 created
    deployment.apps/kibana-logging created
    service/kibana-logging created
    Fluentd-Elasticsearch is enabled
    Addon dns is already enabled.
    [root@vlsr01 ~]# microk8s kubectl get services -n kube-system
    NAME                        TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)                  AGE
    metrics-server              ClusterIP   10.152.183.254   <none>        443/TCP                  2d13h
    kubernetes-dashboard        ClusterIP   10.152.183.77    <none>        443/TCP                  2d13h
    dashboard-metrics-scraper   ClusterIP   10.152.183.28    <none>        8000/TCP                 2d13h
    kube-dns                    ClusterIP   10.152.183.10    <none>        53/UDP,53/TCP,9153/TCP   2d13h
    elasticsearch-logging       ClusterIP   10.152.183.241   <none>        9200/TCP                 77s
    kibana-logging              ClusterIP   10.152.183.236   <none>        5601/TCP                 76s
    [root@vlsr01 ~]# microk8s kubectl get pods -A
    NAMESPACE            NAME                                         READY   STATUS    RESTARTS   AGE
    kube-system          calico-node-vq9h7                            1/1     Running   0          2d13h
    kube-system          metrics-server-8bbfb4bdb-x2rrq               1/1     Running   2          2d13h
    kube-system          calico-kube-controllers-847c8c99d-mv2g6      1/1     Running   2          2d15h
    default              test-nginx-59ffd87f5-6gpgm                   1/1     Running   2          2d15h
    kube-system          calico-node-574jj                            1/1     Running   2          2d15h
    kube-system          kubernetes-dashboard-7ffd448895-fq852        1/1     Running   0          18h
    kube-system          dashboard-metrics-scraper-6c4568dc68-mh6jd   1/1     Running   0          18h
    kube-system          hostpath-provisioner-5c65fbdb4f-5qm5d        1/1     Running   0          18h
    kube-system          coredns-86f78bb79c-kptf4                     1/1     Running   0          18h
    default              nginx-zl                                     1/1     Running   0          11h
    container-registry   registry-9b57d9df8-qvrwr                     1/1     Running   0          11h
    kube-system          elasticsearch-logging-0                      1/1     Running   0          11m
    kube-system          fluentd-es-v3.0.2-bsmkw                      1/1     Running   3          11m
    kube-system          kibana-logging-7cf6dc4687-wf5rw              1/1     Running   6          11m
    [root@vlsr01 ~]# microk8s kubectl cluster-info
    Kubernetes control plane is running at https://127.0.0.1:16443
    Metrics-server is running at https://127.0.0.1:16443/api/v1/namespaces/kube-system/services/https:metrics-server:/proxy
    CoreDNS is running at https://127.0.0.1:16443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy
    Elasticsearch is running at https://127.0.0.1:16443/api/v1/namespaces/kube-system/services/elasticsearch-logging/proxy
    Kibana is running at https://127.0.0.1:16443/api/v1/namespaces/kube-system/services/kibana-logging/proxy

    To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.
    # start kubectl proxy
    # adjust the access settings (--address, --accept-hosts) to suit your needs
    [root@vlsr01 ~]# microk8s kubectl proxy --address=0.0.0.0 --accept-hosts='.*' &
    # if firewalld is running, allow traffic on the proxy port
    [root@vlsr01 ~]# firewall-cmd --add-port=8001/tcp --permanent
    [root@vlsr01 ~]# firewall-cmd --reload
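The `fluentd-es` and `kibana-logging` pods in the listing above show several restarts shortly after deployment, so it may be worth waiting until all three EFK workloads have finished rolling out before opening Kibana. The sketch below is a hypothetical helper, not something shipped with MicroK8s: the function name `wait_for_efk` and the `KUBECTL` override variable are assumptions, and the object names are copied from the `microk8s enable fluentd` output above, so they may differ in other MicroK8s releases. It relies only on `kubectl rollout status`, which handles Deployments, DaemonSets and StatefulSets.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of MicroK8s): wait until the EFK workloads
# created by `microk8s enable fluentd` have finished rolling out.
# Object names are copied from the addon output; adjust them if yours differ.

KUBECTL="${KUBECTL:-microk8s kubectl}"   # assumption: override to use a plain kubectl
NS="kube-system"

# wait_for_efk [TIMEOUT]   e.g. wait_for_efk 300s
wait_for_efk() {
  local timeout="${1:-300s}"
  local obj
  for obj in statefulset/elasticsearch-logging \
             daemonset/fluentd-es-v3.0.2 \
             deployment/kibana-logging; do
    echo "waiting for ${obj} ..." >&2
    # $KUBECTL is left unquoted on purpose so "microk8s kubectl" splits into two words
    $KUBECTL -n "$NS" rollout status "$obj" --timeout="$timeout" || return 1
  done
}
```

Source the file and run `wait_for_efk 300s`; the function stops and returns a non-zero status as soon as one rollout fails or times out, so it can gate the `kubectl proxy` step in a provisioning script.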

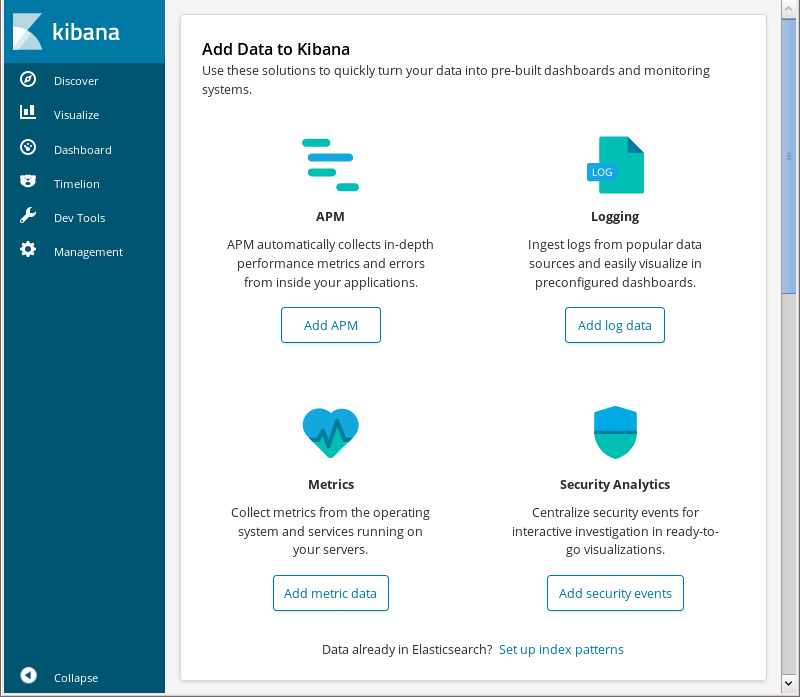

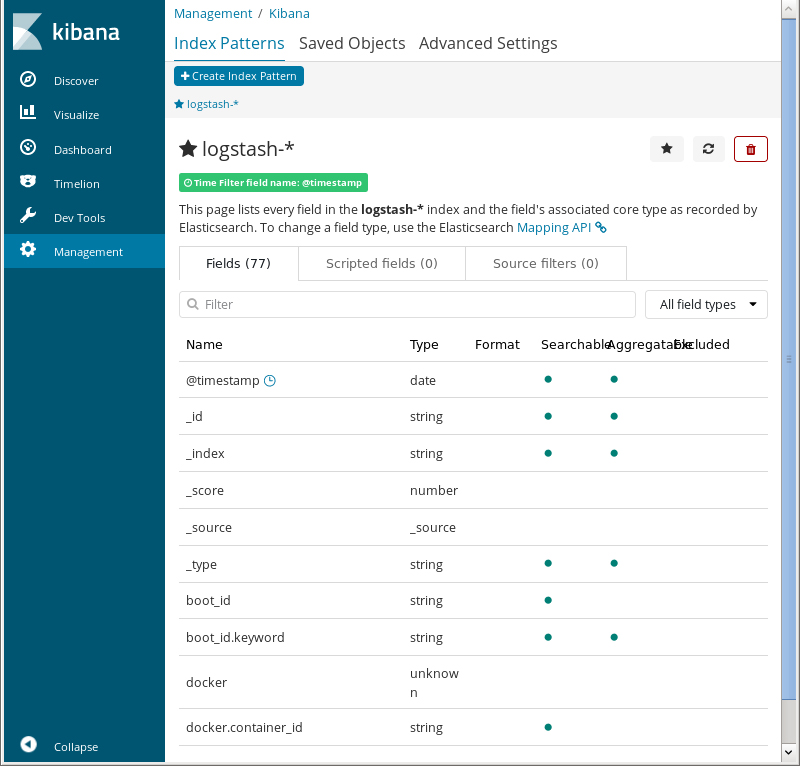

[2] From a computer on the local network, open [http://(MicroK8s_Primary_Node IP or hostname):8001/api/v1/namespaces/kube-system/services/kibana-logging/proxy] in a web browser (`kubectl proxy` serves plain HTTP). If everything is working, the Kibana web UI appears.
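To check the same URL from a script rather than a browser, the sketch below builds the API-server proxy path (`/api/v1/namespaces/<namespace>/services/<service>/proxy`, the pattern visible in the `cluster-info` output) and probes it with `curl`. The function names `svc_proxy_url` and `probe_url` are hypothetical, and the default port 8001 assumes `kubectl proxy` was started with its default port as in step [1].

```shell
#!/usr/bin/env bash
# Sketch: build the `kubectl proxy` URL for a Service and probe it with curl.
# Assumes `kubectl proxy` is running as in step [1] (plain HTTP, default port 8001).

# svc_proxy_url HOST NAMESPACE SERVICE [PORT]
# Prints the API-server proxy URL for a Service reached through `kubectl proxy`.
svc_proxy_url() {
  local host="$1" ns="$2" svc="$3" port="${4:-8001}"
  printf 'http://%s:%s/api/v1/namespaces/%s/services/%s/proxy\n' \
    "$host" "$port" "$ns" "$svc"
}

# probe_url URL
# Prints the HTTP status code returned by URL; curl prints 000 if nothing answered.
probe_url() {
  curl -s -o /dev/null -w '%{http_code}' --max-time 10 "$1"
}
```

For example, `probe_url "$(svc_proxy_url vlsr01.zicher.lab kube-system kibana-logging)"` prints a status code: a 2xx or 3xx code suggests Kibana is answering, 503 typically means the Service has no ready endpoints yet, and 000 means nothing answered on port 8001 (for instance, `kubectl proxy` is not running or the firewall rule is missing). The same helper works for `elasticsearch-logging`, which `cluster-info` also lists.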